How to Conduct a Landing Page Split Test

This is an excerpt from HubSpot’s ebook “An Introduction to A/B Testing.” To learn more about split testing, download your free copy.

By Maggie Georgieva

With landing page A/B testing, you have one URL and two or more versions of the page. When you send traffic to that URL, visitors will be randomly sent to one of your variations. Standard landing page A/B testing tools remember which page the reader landed on and will keep showing that page to the user. For statistical validity, split tests need to set a cookie on each visitor to ensure the visitor sees the same variation each time they go to the tested page. This is how HubSpot’s advanced landing pages and Google’s Website Optimizer work.
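To make the cookie mechanism concrete, here is a minimal sketch of sticky assignment. It is not how HubSpot or Google implement it. The cookie name `ab_variation`, the function `assign_variation`, and the plain-dictionary stand-in for browser cookies are all assumptions made for illustration.

```python
import random

# Hypothetical cookie name, chosen only for this illustration.
COOKIE_NAME = "ab_variation"


def assign_variation(cookies, variations, rng=random):
    """Return (variation, cookies_to_set) for one page request.

    `cookies` is a dict standing in for the visitor's browser cookies.
    A returning visitor keeps the variation stored in the cookie;
    a new visitor gets a random one plus a cookie that remembers it.
    """
    saved = cookies.get(COOKIE_NAME)
    if saved in variations:
        # Returning visitor: show the same page as before.
        return saved, {}
    # New visitor (or stale cookie): pick a variation at random.
    chosen = rng.choice(variations)
    return chosen, {COOKIE_NAME: chosen}


variations = ["A", "B"]
first, to_set = assign_variation({}, variations)       # first visit
again, _ = assign_variation(to_set, variations)        # repeat visit
print(first == again)  # the repeat visit sees the same variation
```

Storing the choice on the visitor, rather than re-rolling on every request, is what keeps each person's views and conversions attributed to a single variation.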

HubSpot’s advanced landing pages enable you to create A/B tests and track a number of metrics to evaluate how your experiment is performing. The tool keeps a record of the number of people who have viewed each variation and the number of people who took the intended action. For example, it might inform you that each of your landing page variations was viewed 180 times, with the top-performing one generating 20 clicks and the lowest-performing one generating five clicks. While this information is important, it’s not enough on its own to decide whether your results are significant.

Determine Statistical Significance

From a high-level view, you can probably tell if your test results are significant or not. If the difference between the tests is very small, it may be that the variable you tested just doesn’t influence the behavior of your viewers.

However, it’s important to test your results for statistical significance from a mathematical perspective. There are a number of online calculators you can use for this, including:

- HubSpot’s A/B Testing Calculator

- Split Test Calculator

- User Effect’s Split Test Calculator and Decision Tool
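For readers curious about what such calculators do, the sketch below applies a pooled two-proportion z-test, one common approach, to the earlier example of 180 views per variation with 20 and five clicks. The calculators listed above may use a different method. The function name `two_proportion_z_test` and the 0.05 threshold are assumptions made for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, views_a, conv_b, views_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / views_a
    rate_b = conv_b / views_b
    # Pooled rate: the conversion rate if both variations behaved the same.
    pooled = (conv_a + conv_b) / (views_a + views_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (rate_a - rate_b) / std_error
    # Two-sided p-value from the standard normal distribution.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Figures from the example above: 180 views each, 20 clicks vs. 5 clicks.
z, p_value = two_proportion_z_test(20, 180, 5, 180)
print(f"z = {z:.2f}, p = {p_value:.4f}")
print("significant at 0.05" if p_value < 0.05 else "not significant at 0.05")
```

A small p-value means a gap this large would be unlikely if the two variations truly converted at the same rate. The normal approximation used here becomes less reliable when a variation has only a handful of conversions.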

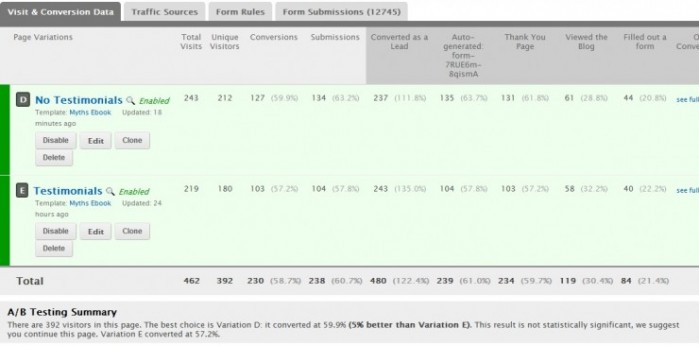

The screenshot below is an example of a landing page test that we ran at HubSpot using our advanced tool. In this example, we wanted to see if including testimonials for one of our ebooks would positively affect conversions. From the data below, we are able to see how many visitors each of the variations received, and the corresponding number of form submissions for each.

The tool tells us that the best choice is Variation D: it converted at 59.9% (2.7% better than Variation E). However, the tool also reports that this result is not statistically significant, which encourages us to continue the test. For now, the takeaway is that the difference between the variations is too small to show that testimonials affected the behavior of viewers.

Testing Is about Measuring over Time

You may be tempted to check your campaign every day to try to figure out the results of your A/B test. This is okay, but don’t draw any conclusions from this information just yet. At that point you are only monitoring your A/B test, not fully measuring it. It’s like deciding you should become a pro baseball player based on a Little League game where you hit a home run. Measurement looks at trends over time, so wait until you have gathered enough data before analyzing your results.

So how long should you wait? First, make sure you have sent enough traffic to the pages. (That is often a tough task for small businesses.) For a landing page A/B test, it’s a good idea to wait about 15-30 days before evaluating your results. This will allow you to see trends as well as any issues that may have popped up. It should also give you enough data to come up with an accurate conclusion.
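As a rough way to judge what "enough traffic" means, the sketch below uses a standard textbook approximation for comparing two conversion rates. It is not a rule from this article. The 10% and 12% conversion rates, the 300 daily visitors, the 5% significance level, the 80% power, and both function names are hypothetical values chosen for illustration.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variation(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate visitors needed per variation to detect the given lift."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def days_to_run(visitors_needed, daily_visitors, variations=2):
    """Days required when daily traffic is split evenly across variations."""
    return ceil(visitors_needed * variations / daily_visitors)


# Hypothetical inputs: 10% baseline, hoping to detect 12%, 300 visitors a day.
needed = visitors_per_variation(0.10, 0.12)
print(needed, "visitors per variation")
print(days_to_run(needed, daily_visitors=300), "days at 300 visitors per day")
```

The smaller the lift you hope to detect, the more visitors you need, which is why low-traffic pages often need the full 15-30 days or longer.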

Even after you’ve waited for 15-30 days and sent a lot of traffic to your test, if you haven’t seen a statistically significant result, then the difference probably wouldn’t make a big impact on conversions. Don’t be afraid to move on to another, more radical experiment.

If At First You Don’t Succeed, Try Again

You may conduct an A/B test but not find statistically significant results. This doesn’t mean that your A/B test has failed. Plan a new iteration for your next test. For example, consider testing the same variable again with different variations, and see if that makes a difference. If not, that variable may have little bearing on your conversion rates, but there may be another element on your page that you can adjust to increase leads.

Effective A/B testing focuses upon continuous improvement. Remember that as long as you keep working towards improvement, you’re going in the right direction.

This is a guest post by Magdalena Georgieva, an inbound marketing manager at HubSpot. HubSpot is a marketing software company based in Cambridge, MA that makes inbound marketing and lead management software.